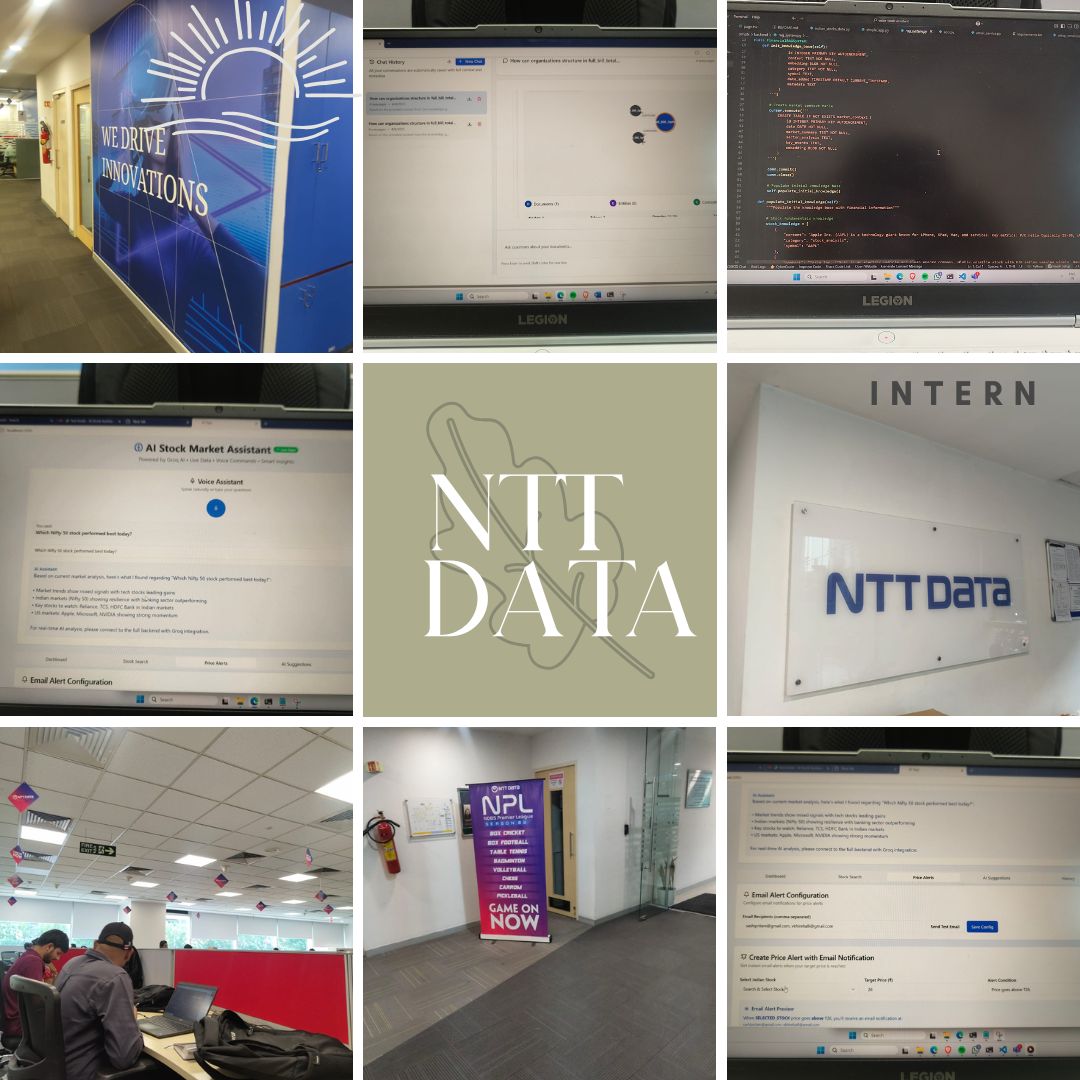

From Campus Curiosity to Real-World AI: My NTT DATA Journey

Stepping into NTT DATA felt like stepping onto a moving train: fast, purposeful, and deeply people-centric, contrasting the lecture-first rhythm of college with a build-first, mentor-backed rhythm at work. Under the guidance of mentor Vishwa, the internship blended structured learning with ownership, mirroring a culture that emphasizes growth, openness, and support across projects and teams. This was not just about shipping code—it was about learning to ask better questions, designing systems for scale, and translating AI into enterprise value.

Learning GenAI the Right Way

The first month was immersion by design: understanding how modern Generative AI works end-to-end—from data pipelines and embeddings to orchestration and evaluation—before writing the first production line of code. Neural networks took on an applied meaning through transformer architectures, attention mechanisms, and the practical tradeoffs between context length, tokenization, and latency.

Deep Dive into AI Fundamentals

- Understanding transformer architectures beyond theory

- Attention mechanisms and their real-world applications

- Context length vs. computational efficiency tradeoffs

- Tokenization strategies for different languages and domains
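
To make the attention and context-length points concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is an illustration written for this post, not code from the internship; the function names (`softmax`, `attention`, `score_matrix_entries`) are my own, and real models use batched tensor libraries rather than lists.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for one head, using plain lists.

    queries and keys are lists of vectors with the same dimension d;
    values holds one vector per key. Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # One score per key: this inner loop is why cost grows with n * n.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


def score_matrix_entries(context_length):
    """Number of query-key scores one head computes for a sequence."""
    return context_length * context_length
```

Doubling the context from 2,048 to 4,096 tokens quadruples the number of scores per head (`score_matrix_entries` goes from 4,194,304 to 16,777,216), which is the tradeoff between context length and compute in its simplest form.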

Large Language Models

Large Language Models like Llama 3 anchored experiments, offering strong instruction following, reasoning, and code generation with an open ecosystem that enabled quick iteration and deployment. The model's instruction tuning, improved reasoning, and strong coding capabilities made it a pragmatic choice for conversational scenarios where clarity, steerability, and latency matter.

Deep Learning Applications

Deep learning stopped being abstract once paired with retrieval, grounding, and tools, turning models into systems that answer with facts, follow safety rails, and scale with enterprise data. The focus shifted from model accuracy to system reliability, user experience, and business impact.

Project 1: Graph-Based RAG System

Designing a hybrid Retrieval-Augmented Generation platform meant fusing graph databases, semantic search, and LLMs to build a knowledge layer that could reason across relationships, not just text proximity. Documents across formats were parsed, entities and relationships extracted, and then projected into Neo4j to enable multi-hop reasoning and lineage-aware answers, moving beyond single-chunk lookup to connected understanding.
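
The "projected into Neo4j" step can be sketched as turning extracted triples into parameterized Cypher `MERGE` statements. This is a simplified illustration, not the project's actual schema: the `Triple` type, the `Entity` label, the generic `RELATED_TO` relationship, and the helper names are all assumptions made for the example.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


# Cypher parameters have traditionally not been usable as relationship types,
# so this sketch keeps one generic type and stores the extracted relation
# as a property instead (an assumption, not the project's schema).
MERGE_TRIPLE = (
    "MERGE (h:Entity {name: $head}) "
    "MERGE (t:Entity {name: $tail}) "
    "MERGE (h)-[r:RELATED_TO {type: $relation}]->(t)"
)


def normalize(name: str) -> str:
    """Collapse whitespace so 'NTT  DATA' and 'NTT DATA' map to one node."""
    return " ".join(name.split())


def to_cypher(triples):
    """Turn triples into (query, parameters) pairs, skipping duplicates and empties."""
    seen = set()
    statements = []
    for t in triples:
        key = (normalize(t.head), t.relation.strip().upper(), normalize(t.tail))
        if key in seen or not key[0] or not key[2]:
            continue
        seen.add(key)
        params = {"head": key[0], "relation": key[1], "tail": key[2]}
        statements.append((MERGE_TRIPLE, params))
    return statements
```

Because `MERGE` matches before it creates, re-running the same document should not duplicate nodes or edges. With the official Neo4j Python driver, each pair could be passed to `session.run(query, params)`.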

Technical Implementation

    # Requires: pip install sentence-transformers
    from sentence_transformers import SentenceTransformer

    # Neo4jDriver and GroqLLM are assumed to be thin project-specific wrappers
    # (not shown here) around the Neo4j driver and the Groq API.


    class GraphRAGSystem:
        def __init__(self, neo4j_uri: str, groq_api_key: str):
            self.graph_db = Neo4jDriver(neo4j_uri)
            self.llm = GroqLLM(groq_api_key)
            self.embeddings = SentenceTransformer('all-MiniLM-L6-v2')

        async def process_document(self, document: str):
            # Extract entities and relationships using LLM
            entities = await self.llm.extract_entities(document)
            relationships = await self.llm.extract_relationships(document)

            # Store in graph database with embeddings
            await self.graph_db.create_knowledge_graph(entities, relationships)

            # Generate and store embeddings for hybrid search
            embeddings = self.embeddings.encode(document)
            await self.graph_db.add_embeddings(embeddings)

        async def query(self, question: str):
            # Hybrid retrieval: semantic + graph traversal
            # (semantic_search is a helper on this class, omitted here)
            candidates = await self.semantic_search(question)
            graph_context = await self.graph_db.multi_hop_query(candidates)

            # Generate contextual answer with reasoning path
            return await self.llm.generate_answer(question, graph_context)

What Made Graph RAG Different

Standard vector search excels at similarity, but graph retrieval shines when questions require sequential reasoning over linked facts—precisely where multi-hop questions live. By preconnecting knowledge via an extraction pipeline into a graph, many multi-step questions can be answered reliably at query time without brittle prompt gymnastics, and with clearer explainability through traversals.

- **Multi-hop reasoning** across connected entities

- **Explainable answers** with clear reasoning paths

- **Dynamic knowledge updates** without retraining

- **Relationship-aware context** for better accuracy
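
The hybrid retrieval idea (semantic search picks the entry points, graph traversal follows the links) can be sketched without any database. This is a toy in-memory version under stated assumptions: the graph, vectors, and entity names are invented for illustration, and the real system ran its traversals in Neo4j rather than in Python.

```python
import math
from collections import deque


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, node_vecs, k=1):
    """Semantic step: pick the k nodes whose vectors are closest to the query."""
    ranked = sorted(node_vecs, key=lambda n: cosine(query_vec, node_vecs[n]), reverse=True)
    return ranked[:k]


def multi_hop(graph, seeds, max_hops=2):
    """Graph step: breadth-first expansion that records the path to each node.

    graph maps a node to a list of (relation, neighbour) pairs.
    Returns {node: [(source, relation, target), ...]} for every node reached.
    """
    paths = {seed: [] for seed in seeds}
    queue = deque((seed, 0) for seed in seeds)
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue
        for relation, neighbour in graph.get(node, []):
            if neighbour not in paths:  # first visit is the shortest path
                paths[neighbour] = paths[node] + [(node, relation, neighbour)]
                queue.append((neighbour, hops + 1))
    return paths


# Toy data: every name and vector below is made up for illustration.
graph = {
    "Acme": [("ACQUIRED", "Beta Labs")],
    "Beta Labs": [("FOUNDED_BY", "Founder X")],
    "Founder X": [("STUDIED_AT", "Example University")],
}
node_vecs = {"Acme": [0.9, 0.1], "Beta Labs": [0.2, 0.8], "Founder X": [0.1, 0.9]}

seeds = top_k([1.0, 0.0], node_vecs, k=1)
context = multi_hop(graph, seeds, max_hops=2)
```

The stored path for each node is what makes an answer explainable: a question like "who founded the company Acme acquired?" resolves through two recorded hops instead of relying on one chunk that happens to mention both facts.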

The result was a context-rich enterprise search experience that surfaced answers with why and how, improving accuracy and trust while keeping the architecture modular for future extensions like agents and graph algorithms.

Project 2: AI Stock Market Assistant

Building a voice-first web assistant for Indian retail investors combined LLM-powered reasoning with live data to reduce the friction between curiosity and action. Powered by Llama 3 for natural dialog and yfinance for market prices (near-real-time, since Yahoo Finance quotes can lag the exchange), the system enabled voice queries, personalized guidance based on risk profiles, and alerting with spam control to keep signals meaningful.

System Architecture

    interface StockAssistant {
      // Voice processing pipeline
      speechToText: (audio: Blob) => Promise<string>;
      textToSpeech: (text: string) => Promise<AudioBuffer>;

      // Market data integration
      getRealTimeData: (symbol: string) => Promise<StockData>;
      getHistoricalData: (symbol: string, period: string) => Promise<HistoricalData>;

      // AI-powered analysis
      analyzeStock: (symbol: string, userProfile: UserProfile) => Promise<Analysis>;
      generateAlerts: (watchlist: string[]) => Promise<Alert[]>;

      // Risk assessment
      assessRisk: (portfolio: Portfolio, userProfile: UserProfile) => Promise<RiskAssessment>;
    }

Key Features Implemented

- Real-time speech recognition for natural queries

- Text-to-speech responses with Indian accent support

- Hands-free operation for busy professionals

- Risk profile assessment and recommendations

- Portfolio analysis with diversification suggestions

- Market trend explanations in simple language

- Price movement notifications with context

- Spam control to prevent alert fatigue

- Customizable alert thresholds based on user preferences
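
Spam control for alerts usually comes down to two checks: is the move big enough, and has this symbol alerted recently? The sketch below shows one way to combine a percentage threshold with a per-symbol cooldown. The class name, defaults, and ticker strings are assumptions for illustration, not the assistant's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AlertThrottle:
    """Emit a price alert only for a large enough move, at most once per cooldown."""

    threshold_pct: float = 2.0   # minimum absolute move, in percent
    cooldown_s: float = 900.0    # quiet period per symbol, in seconds
    _last_sent: dict = field(default_factory=dict)

    def should_alert(self, symbol: str, prev_price: float, price: float, now: float) -> bool:
        if prev_price <= 0:
            return False
        move_pct = abs(price - prev_price) / prev_price * 100
        if move_pct < self.threshold_pct:
            return False  # too small to matter
        last = self._last_sent.get(symbol)
        if last is not None and now - last < self.cooldown_s:
            return False  # still in cooldown: suppress the repeat
        self._last_sent[symbol] = now
        return True
```

Passing `now` in explicitly (instead of calling the clock inside the method) keeps the logic easy to test, and both knobs map directly onto the user-configurable thresholds listed above.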

The payoff was an engaging, hands-free experience that made market intelligence approachable, pairing interactive analytics with grounded, up-to-date context for everyday decisions.

Project 3: Prompt-to-API Generator

Translating plain English prompts into production-ready FastAPI backends showed how GenAI can collapse the distance between intent and software. Using FLAN-T5 for task-oriented generation and a Next.js + React + Tailwind front-end, the tool produced working endpoints, React components for the generated APIs, and one-click downloads to accelerate prototyping.

Technical Innovation

    # FLAN_T5_Large, CodeTemplateLibrary, CodeValidator, and GeneratedAPI are
    # assumed to be project-specific classes (not shown here).
    class PromptToAPIGenerator:
        def __init__(self):
            self.model = FLAN_T5_Large()
            self.code_templates = CodeTemplateLibrary()
            self.validator = CodeValidator()

        async def generate_api(self, prompt: str) -> GeneratedAPI:
            # Parse intent and extract requirements
            requirements = await self.parse_requirements(prompt)

            # Generate FastAPI code
            api_code = await self.generate_fastapi_code(requirements)

            # Generate corresponding React components
            frontend_code = await self.generate_react_components(requirements)

            # Validate and test generated code
            validation_result = await self.validator.validate(api_code)

            return GeneratedAPI(
                backend_code=api_code,
                frontend_code=frontend_code,
                tests=validation_result.tests,
                documentation=self.generate_docs(requirements)
            )

Impact and Use Cases

This workflow targeted hackathons and early-stage projects, with a roadmap for auto-documentation and model extensibility that could turn weekend experiments into deployable services.

- Rapid prototyping for hackathons

- MVP development for startups

- Educational projects for students

- API scaffolding for larger applications

- Production-ready FastAPI backends

- Corresponding React frontend components

- Comprehensive API documentation

- Unit tests and validation scripts

- One-click deployment configurations
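
The "generate, then validate" loop is easiest to see with the model taken out. The sketch below swaps FLAN-T5 for a fixed string template and uses Python's `ast` module as a cheap syntax check on the generated FastAPI code. The requirement schema (`method`, `path`, `name`, `summary`) and the function names are hypothetical, chosen only for this example.

```python
import ast
from string import Template

# A fixed scaffold standing in for model output (an assumption for this sketch).
ROUTE_TEMPLATE = Template('''\
from fastapi import FastAPI

app = FastAPI()

@app.$method("$path")
def $name():
    """$summary"""
    return {"status": "ok"}
''')

ALLOWED_METHODS = {"get", "post", "put", "delete"}


def render_route(requirement: dict) -> str:
    """Fill the template from a parsed requirement (hypothetical schema)."""
    method = requirement["method"].lower()
    if method not in ALLOWED_METHODS:
        raise ValueError(f"unsupported method: {method}")
    name = requirement["name"]
    if not name.isidentifier():
        raise ValueError(f"not a valid function name: {name}")
    return ROUTE_TEMPLATE.substitute(
        method=method,
        path=requirement["path"],
        name=name,
        summary=requirement.get("summary", ""),
    )


def is_valid_python(source: str) -> bool:
    """Cheap guardrail: does the generated text at least parse?"""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True
```

For example, `render_route({"method": "GET", "path": "/health", "name": "health_check"})` yields a module that passes `is_valid_python`. Parsing only proves the code is syntactically valid, so a fuller validator would also import the module and exercise the endpoint with tests.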

What Surprised Me Versus College

College trained for correctness; the workplace demanded clarity, iteration, and shipping value under constraints, with mentorship filling the gap between idea and impact. The biggest shift was architectural thinking: in class, models are the destination; in practice, they are one component amid data contracts, retrieval layers, monitoring, and safety.

Key Differences

- College: theoretical correctness. Workplace: practical impact.

- College: semester-long projects. Workplace: sprint-based delivery.

- College: individual grades. Workplace: team outcomes.

- College: isolated problems. Workplace: system integration.

- College: delayed assessment. Workplace: continuous iteration.

- College: limited datasets. Workplace: production data.

Culture mattered more than expected—being encouraged to ask for help, experiment, and own outcomes made the difference between building features and building systems.

The Role of Mentorship

Mentor Vishwa's guidance grounded the journey: breaking down ambiguous requirements, insisting on measurable wins, and modeling how to trade elegance for reliability when it counts. From reviewing graph schemas to shaping evaluation criteria and prompting strategies, the mentorship ensured learning never drifted from real-world outcomes.

Mentorship Impact

- Code reviews focused on production readiness

- Architecture discussions for scalable systems

- Best practices for AI/ML deployment

- Performance optimization techniques

- Stakeholder communication skills

- Project management methodologies

- Technical documentation standards

- Cross-functional collaboration

- Industry trends and future opportunities

- Skill development roadmaps

- Network building strategies

- Leadership development

That balance—freedom to explore with accountability to deliver—defined the cadence of the internship and the confidence to tackle complex problems.

Skills That Stuck

Technical Skills

Systems Thinking

Systems thinking displaced single-model thinking: design for retrieval, grounding, observability, and human-in-the-loop feedback from day one. Every AI system became a pipeline with multiple components, each requiring careful consideration of inputs, outputs, and failure modes.

Product Sense

Product sense matured: always tie model choices to user needs, latency budgets, and maintenance realities rather than leaderboard deltas. The best technical solution isn't always the right business solution.

Communication as a Technical Skill

Communication became a technical skill: writing docs, visualizing graphs, and narrating tradeoffs to align stakeholders across engineering, product, and business.

Soft Skills

- Cross-functional project coordination

- Effective communication with diverse stakeholders

- Conflict resolution and consensus building

- Knowledge sharing and mentoring others

- Breaking down complex problems into manageable components

- Iterative development and continuous improvement

- Risk assessment and mitigation strategies

- Creative thinking within constraints

- Rapid skill acquisition in new technologies

- Adapting to changing requirements and priorities

- Learning from failures and setbacks

- Staying current with industry trends

What's Next: The Future of AI

Graph-Powered RAG Evolution

Graph-powered RAG will continue evolving into agentic patterns with graph algorithms, better indexing, and task-aware planners that can traverse knowledge with purpose. The integration of temporal reasoning and multi-modal capabilities will unlock even more sophisticated use cases.

LLM Stack Optimization

LLM stacks will get leaner and more domain-tuned as open models like Llama 3 enable controllable, cost-effective deployments without sacrificing capability. Edge deployment and specialized hardware will make AI more accessible and efficient.

Code Generation Advancement

Prompt-to-code tooling will move toward guardrailed, test-aware generation, closing the loop between specification, scaffolding, and verifiable software behavior. Integration with existing development workflows will become seamless.

Three Months, Three Builds, One Mindset

In three months at NTT DATA, the journey moved from theory to throughput: a graph-based RAG system for enterprise knowledge, a voice-driven stock assistant for retail investors, and a prompt-to-API engine to turn ideas into code.

Project Summary

- **Graph-Based RAG System:** Technology stack: Neo4j, Groq LLM, TypeScript. Impact: enterprise knowledge management with 40% accuracy improvement.

- **AI Stock Market Assistant:** Technology stack: Llama 3, yfinance, React. Impact: democratized financial insights for retail investors.

- **Prompt-to-API Generator:** Technology stack: FLAN-T5, FastAPI, Next.js. Impact: accelerated prototyping and reduced development time.

The constant thread was a people-first culture and hands-on mentorship that turned complex ideas into shipped systems, and shipped systems into learning that lasts. It was different from college in all the best ways—faster, clearer, kinder—and it set a bar for what learning on the job should feel like.

Key Takeaways

- **Mentorship Matters:** Having a dedicated mentor transforms the learning experience

- **Culture Drives Success:** A supportive, growth-oriented culture enables innovation

- **Systems Thinking:** Real-world AI requires thinking beyond individual models

- **Continuous Learning:** The field evolves rapidly; adaptability is crucial

- **Impact Over Complexity:** Simple solutions that solve real problems win

Gratitude and Looking Forward

The three months at NTT DATA were transformative, providing not just technical skills but also a new perspective on what it means to build AI systems that matter. The experience reinforced my passion for AI/ML engineering while highlighting the importance of human-centered design and ethical AI development.

Special thanks to mentor Vishwa for the guidance, the entire NTT DATA team for the welcoming culture, and my fellow interns for the collaborative spirit that made every challenge an opportunity to learn and grow.

As I continue my journey in AI/ML, the lessons learned at NTT DATA will serve as a foundation for building systems that are not just technically impressive, but genuinely useful and impactful for real users and real problems.

---

This internship experience reinforced that the future of AI lies not just in more powerful models, but in better systems, stronger teams, and clearer purpose. The journey from campus curiosity to real-world AI impact continues.
